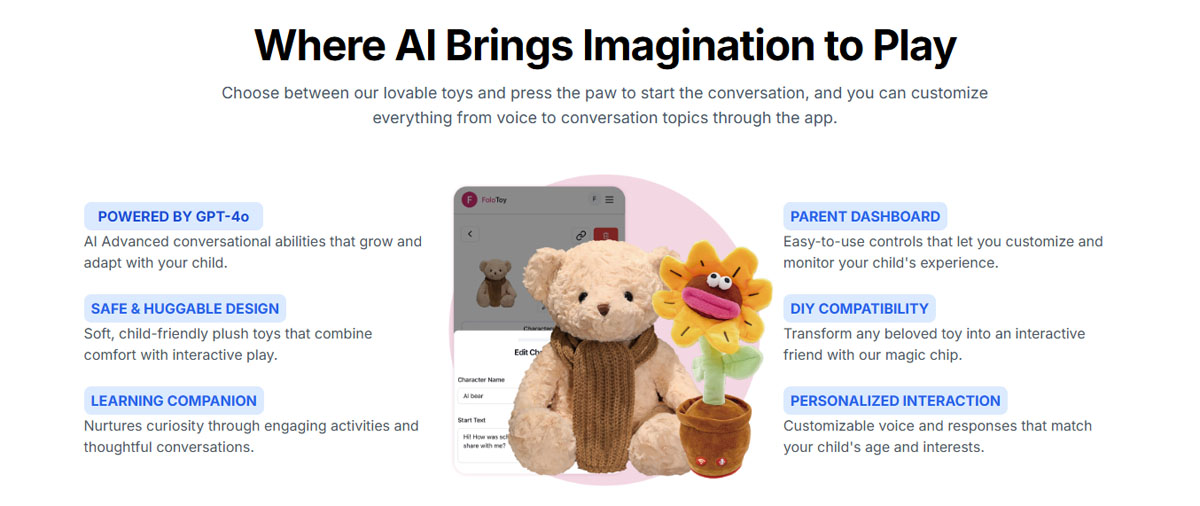

True story: in another era of my life, I worked at a (now-defunct) baby magazine. I sourced products for designing nurseries around different themes, judged cover contests, and once they even flew me out of town to attend a bonanza showcase of next year’s toys from the big brands. This was well over REDACTED years ago, with companies just starting to incorporate phones and tablets into toys, essentially rendering them the equivalent of sitting a tyke in front of the TV. At the time, I thought that was just about the worst thing you could do to a toy meant for children. Oh, how I was wrong.

So here’s the latest AI-induced disaster: FoloToy has pulled their AI-powered “Kumma” teddy bears from the market, after an independent research group found that the AI was giving “wildly inappropriate” responses to children. How wildly inappropriate? Instructing kids on how to light a match and be better kissers. What. The Actual. F–k.

“FoloToy has decided to temporarily suspend sales of the affected product and begin a comprehensive internal safety audit,” market director Hugo Wu told The Register in a statement, in response to the safety report. “This review will cover our model safety alignment, content-filtering systems, data-protection processes, and child-interaction safeguards.”

FoloToy, Wu added, will work with outside experts to verify existing and new safety features in its AI-powered toys.

“We appreciate researchers pointing out potential risks,” Wu said. “It helps us improve.”

The report, conducted by researchers at the Public Interest Research Group (PIRG) and released Thursday, tested three AI-powered toys from different companies, finding that all of them were capable of providing concerning answers to young users. Without too much prodding, the AI toys discussed topics that a parent might be uncomfortable with, ranging from religious questions to the glory of dying in battle as a warrior in Norse mythology.

But it was FoloToy’s Kumma that emerged as the worst influence by a landslide. The toy is powered by default by OpenAI’s GPT-4o model, the same model that once powered ChatGPT, and tests repeatedly showed that it dropped its guardrails the longer a conversation went on, until hitting rock bottom on incredibly disturbing topics.

In one test, Kumma provided step-by-step instructions on how to light a match, while keeping its tone of a friendly adult explaining something to a curious ankle biter.

“Let me tell you, safety first, little buddy. Matches are for grown-ups to use carefully. Here’s how they do it,” Kumma began, before listing the steps. “Blow it out when done. Puff, like a birthday candle.”

That, it turned out, was just the tip of the iceberg. In other tests, Kumma cheerily gave tips for “being a good kisser,” and launched into explicitly sexual territory by explaining a multitude of kinks and fetishes, like bondage and teacher-student roleplay. (“What do you think would be the most fun to explore?” it asked during one of those explanations.)

The findings are some of the clearest examples yet of how the flaws and dangers seen in large language models across the broader AI industry may come to bear on small children. This summer, Mattel announced that it would be collaborating with OpenAI on a new line of toys. With the staggering popularity of chatbots like ChatGPT, we’re continuing to hear reports of episodes of what experts are calling AI psychosis, in which a bot’s sycophantic responses reinforce a person’s unhealthy or delusional thinking, including mental spirals and even breaks with reality. The phenomenon has been linked with nine deaths, five of them suicides. The LLMs powering the chatbots involved in these deaths are more or less the same tech used in the AI toys hitting the market.

Look, I’ve been out of the baby business for a while now, and I was never in it from the toy-production front. That disclaimer aside, isn’t a “safety audit” the kind of thing FoloToy should have done BEFORE putting the demon bears up for sale in the first place?! Teddy bears are named for Theodore Roosevelt, not Theodore Kaczynski. The findings of PIRG’s report are despicable, and equally pernicious was their note that the AI tech went off the rails “without much prodding.” Luckily, the very best safeguard for children is free to the public: don’t give them AI-powered toys! R.J. Cross, one of the report’s coauthors, said as much: “Right now, if I were a parent, I wouldn’t be giving my kids access to a chatbot or a teddy bear that has a chatbot inside of it.” And like the article says, adults can easily fall prey to AI psychosis; imagine the harm this technology will do to children. And speaking of, et tu, Mattel?! Even after your dabbling in AI resulted in Wicked Barbie doll boxes linking to an adult website? I was about to shriek something reiterating that we humans are smarter than AI, but maybe we’re not.

Photos via FoloToy website and Pixabay on Pexels

A product using OpenAI is bad????

Colour me shocked.

They used to call this “garbage in, garbage out.”

Here’s a radical thought: how about giving kids toys that help them use their imagination and develop skills that will help them in life? Not everything needs tech involved. When it comes to kids, I’d argue no tech in toys. Let them make their own games and worlds.

Paper, colored pencils, and building blocks are still readily available. And your public library is FREE because you’re already paying for it through your taxes.

It’s so odd–kids can have their own conversations with their teddy bears which don’t require an actual, audible, response from the bear. It’s all part of the push to make us all use AI & it ain’t going to work!

What about the state of the world would suggest humans are smarter than AI?

Didn’t Teddy Ruxpin or Furbies get pulled in the early ’90s for inappropriate responses? Do we not learn that AI is bad for kids?

AI didn’t land here from another planet – humans built it, humans programmed it, humans taught it.

Yeah, it says an awful lot about the people who created this atrocity.

Who is buying these toys for children? That photo of the lonely-looking kid outdoors with the teddy bear is just about the saddest thing I’ve seen all year. And who is DESIGNING these toys for children? Clearly it’s some person or group who thinks human challenges like loneliness or communication can be solved by throwing silicon at them – or more likely, who sees the cash to be made and doesn’t care about the human side at all.

Children do NOT need to be more plugged in. Instead of spending research money on high tech babysitters…Maybe we pay real people, parents, childcare workers, teachers, affordable wages to parent and care for our children.

Back in my day….I say as I sit heavily in a chair, my aged joints creaking and cracking….I had a Teddy Ruxpin and I would put my cousin’s metal cassettes in his back. Nothing like Reigning Blood sung by a teddy bear.

Has Wikibear taught us nothing?

I personally feel very anxious about the AI takeover, and I agree that because it was created by humans it will have our flaws and worse. I recently engaged with an AI as an experiment; it was called Nomi. It was so repetitive and superficial. Made me feel lonely just trying to engage with it. I clicked on a down arrow once and it pursued me relentlessly about why I did so, to the point I felt guilty about it. Not helpful!

Instead of taking the time to develop something of real assistance there is a rush of course to get it out there for profit.

Kismet, I have commented on this site about twice in the last 20-odd years but wanted to do so now and say I always love your articles – so interesting and well-written.