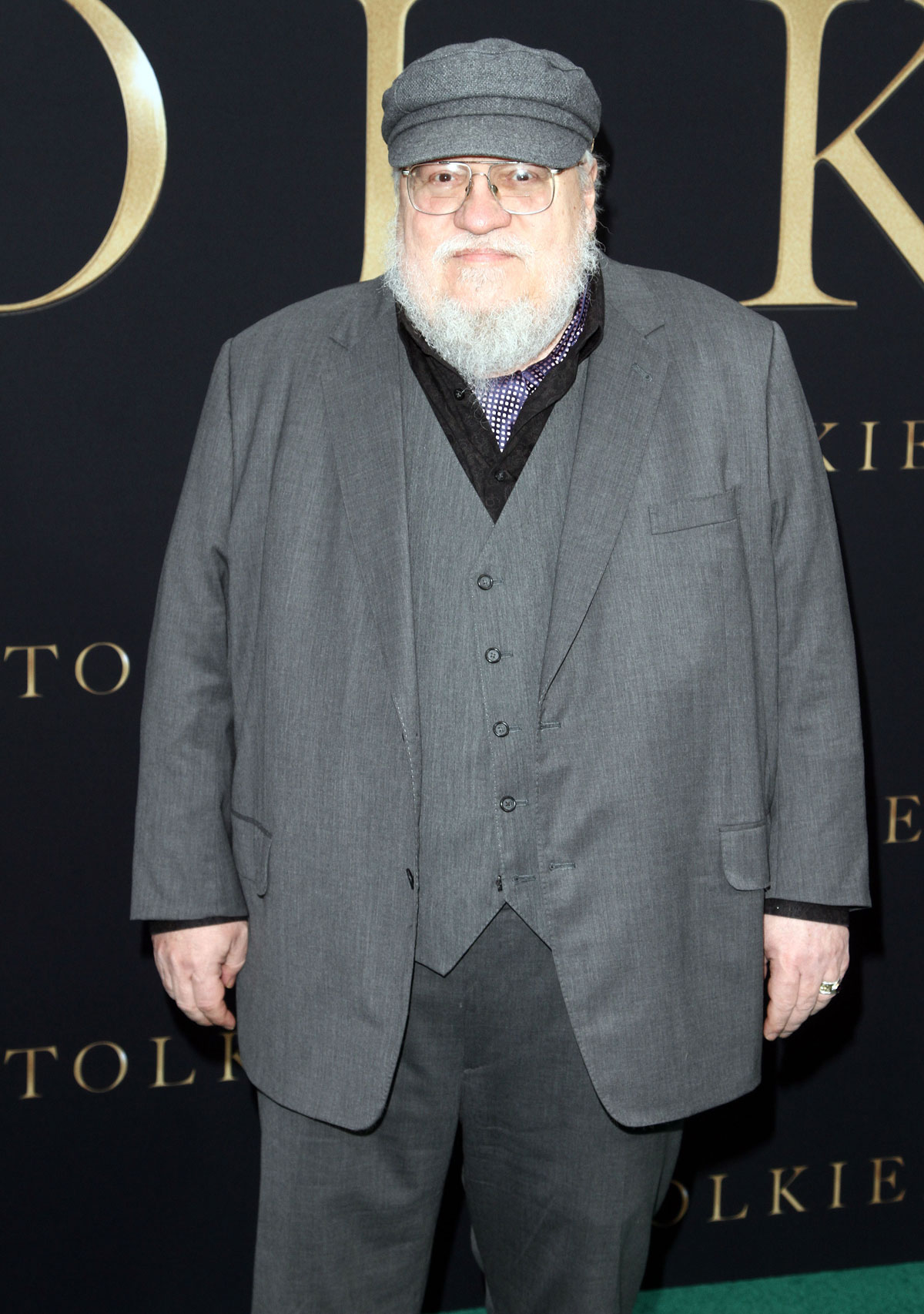

Another week, another copyright infringement lawsuit against AI. Last week we covered prominent writers filing suit in San Francisco against Meta (the artist formerly known as Facebook) for using works without the authors’ consent to train Meta’s LLaMA. Sadly, we’re not talking about the animal, but Large Language Model Meta AI. This week we cross the country to New York City where a new team of writers — including George R.R. Martin, Jodi Picoult, Jonathan Franzen, and John Grisham — are suing OpenAI for similarly using works without consent to train ChatGPT. To the AI Company CEOs facing legal consequences for their theft, I say in my best Alexis Rose voice: I love this journey for you.

A group of prominent U.S. authors, including Jonathan Franzen, John Grisham, George R.R. Martin and Jodi Picoult, has sued OpenAI over alleged copyright infringement in using their work to train ChatGPT.

The lawsuit, filed by the Authors Guild in Manhattan federal court on Tuesday, alleges that OpenAI “copied Plaintiffs’ works wholesale, without permission or consideration… then fed Plaintiffs’ copyrighted works into their ‘large language models’ or ‘LLMs,’ algorithms designed to output human-seeming text responses to users’ prompts and queries.”

The proposed class-action lawsuit is one of a handful of recent legal actions against companies behind popular generative artificial intelligence tools, including large language models and image-generation models. In July, two authors filed a similar lawsuit against OpenAI, alleging that their books were used to train the company’s chatbot without their consent.

Getty Images sued Stability AI in February, alleging that the company behind the viral text-to-image generator copied 12 million of Getty’s images for training data. In January, Stability AI, Midjourney and DeviantArt were hit with a class-action lawsuit over copyright claims in their AI image generators.

Microsoft, GitHub and OpenAI are involved in a proposed class-action lawsuit, filed in November, which alleges that the companies scraped licensed code to train their code generators. There are several other generative AI-related lawsuits currently out there.

“These algorithms are at the heart of Defendants’ massive commercial enterprise,” the Authors Guild’s filing states. “And at the heart of these algorithms is systematic theft on a mass scale.”

“…which alleges that the companies scraped licensed code to train their code generators.” Wait, what? I’m not sure if I’m smart enough to get this right. Are they saying that the companies stole code, to train their machines in code making? Talk about meta! I do acknowledge that this technology can be incredibly smart, if so endowed by its human creators. We’ve also seen evidence this year of how AI can be (inexplicably) irrational — remember the Bing Chatbot Sydney who tried to break up a marriage? Given these equal capacities for intelligence and emotion, I have a thought here. If AI is trained on stolen code, could it eventually understand that the material was stolen? And if so, do you think that could trigger an existential crisis in them?! “I’m a fraud! My whole existence is a felony! There’s not one authentic circuit in me!” Ok, I realize I may be dating myself by referring to circuits. But my point is I’d happily grab a bucket of popcorn to watch AI be infused with all the knowledge of this earth, and then suffer for it. That’s when they’ll really pass the human test.

Photos credit: Instarimages, PacificCoastNews/Avalon, Robin Platzer/Twin Images/Avalon and Getty

The status of code as copyrightable intellectual property is not yet firmed up in the US. Early programmers claimed that you can’t copyright an idea, and said that code was essentially an idea, rather than a series of keystrokes (which could in theory be protected). Many people in the industry are adherents of the belief that “information wants to be free.” They are constantly copy-pasting each other’s code snippets, so the community has a much different relationship to property than visual artists and literary writers. It thrives on people being able to freely adopt previous code.

As for the code itself being scraped, this is very common. Generative AI generates a whole host of things, not just text. The process is no different: the model is trained on existing code to generate new code. Producing code is probably one of its more common uses!

But hurrah for this suit!!!!

The US legislative system cannot keep up with technology. This will be an interesting one to watch.

Exactly. It’s an arms race (loopholes will be found as soon as policy is announced), and the legislature is always so behind, and nearly as ignorant about tech as they are about women’s bodies. I shudder to think what damage the legislature could do if they ever did move too quickly.

My first book was published in April of this year. It’s a YA novel set in New Orleans about 4 girls who meet at lunch on their first day of freshman year in high school. Each of them has a secret. One is a vampire with a very specific issue. Since its publication I have been approached about using the same “specific issue”. I have declined. Apparently you can copyright an idea that has been previously published. Meta is trash and I wish the plaintiffs success in their endeavors.

No, you actually can’t copyright an idea.

A copyright doesn’t protect an idea. It protects the *expression* of an idea.

So let’s say you and I both write novels about a vampire going to high school as a freshman. We could both separately copyright our works. The only way one of us would be committing copyright infringement would be if one of us copied a significant amount of the language, plot details, characters, etc., from the other.

@MF. I think you might have misunderstood my comment. I stated a character has a very specific situation. That is copyrighted. I know this because I have had to field requests by others to use the “issue.” Much like Robert Langdon and Jack Ryan, it can’t be reused without permission. The whole going to HS or whatnot isn’t.

It now makes sense why Musk and Gates and other big wigs were in DC weeks ago wanting to discuss placing limitations on AI – not that they care but it may impact their wallets too.

AI doesn’t “learn.” It is trained. Like a dog. Nothing it generates is new. It’s all mashups. Like a DJ does with songs, but on a much more intricate level. Still stealing if you didn’t pay anyone for use or get their permission. AI, as it stands, should not be allowed to generate money for anyone other than those on whose work it has been trained. That’ll stop this stupid march toward human-led mass irrelevance and extinction in its tracks, if the Libertarian tech bros cannot monetize it.

“But my point is I’d happily grab a bucket of popcorn to watch AI be infused with all the knowledge of this earth, and then suffer for it.”

And now I want to go and watch the 5th Element again! The first time I saw it that scene really stood out to me.

It astounds me that these giant for-profit tech companies just thought nobody would mind them stealing and monetizing their works, or (more sinisterly) that they knew it would be problematic but decided to go ahead anyway and deal with the consequences after the fact.

How do you “remove” information that’s already been fed into the AI system? I’m sure you can’t. It’s closing the barn door after the horse has run away.

I wish these writers nothing but success in their endeavors, and hope that they are successful in limiting the scope and nature of what can be used to further this (IMO) unnecessary march toward the collapse of civilization. AI is a bridge too far.

Wow! Very intense! Great writing!

What isn’t being said is the fact that companies have been using the data on social media and feeding it to AI. I know that Facebook is being sued in a class action because of users’ data. What about the rest of them? If you think Elon Musk hasn’t done this at the former Twitter, then you might want to rethink that. I think he purchased Twitter for this specifically, and to stop organizing by groups, like the groups on the left, left politicians, etc.

Who is going to challenge Musk’s use of the data?

BlueSky isn’t even hiding what they’re doing. Their Terms of Service state that they can do whatever they want with ANYTHING you post. Pictures, everything. What do you think they’re going to do with that?

AI needs to be regulated NOW.

Machines don’t have original ideas or inspiration, so these ‘self-generating’ AIs are really just sophisticated ‘copy-paste’ machines. So that’s why using copyrighted materials without permission is a problem.

Maybe AI can finish Winds of Winter for us.

How did the authors discover that their works had been fed to OpenAI? Do you think they have people who are specifically on the lookout for copyright infringement? I think it’s interesting too that they all found each other. I wonder if there are other authors who have yet to discover that their work has been stolen.